Terrible through Technology

It Started with Darth Maul

Let me get this out of the way first: the Star Wars prequels are terrible. While I don't claim that George Lucas "ruined my childhood" or any of the other gibberish said at the time, I did lose interest in the franchise as a whole after seeing The Phantom Menace. Maybe it was my age, or maybe I was becoming more interested in other genres. Maybe it was reading months of constant Darth Maul hype in Star Wars Insider magazine, only to see him get slightly more screen time than Godot. I'll never know.

Of course, if you were a fan of Star Wars at all, you have probably seen the Plinkett Reviews of the entire prequel trilogy. If you haven't, you should take the time to watch them, even if they are around half the length of the movies themselves.

I'm not a film buff, nor do I know much about cinematography. I just like watching fun movies on occasion. In general, I despise CGI effects; they take me out of the movie. Some CGI can enhance practical effects, but fully rendered scenes don't do much for me. Still, I can see why filmmakers use them: they are cheaper, faster, and easier to produce than practical effects.

Plinkett mentions an adage that perfectly summarizes the difference between the original trilogy and the prequels: Art through Adversity. These three words separate the craftsman from the journeyman. In technological fields, there is now so little adversity that craftsmen are becoming rare.

Low-Level Knowledge

The journeyman photographer is given a camera. He is taught the basics of how the camera works. He can zoom and focus. He sometimes changes the camera's mode from Full Auto to Sports or Action Scene. He knows to keep the Sun at his back in the daytime. He knows how to bounce the flash in low light. He makes a living taking pictures for the local newspaper.

Our photographer hears of a bear that has recently escaped from the zoo. While walking around town, he sees the bear. He could get the perfect shot, but the bear is between him and the Sun. No camera mode can help him; the camera's meter keeps exposing for the bright Sun behind the bear, turning the bear into a dark silhouette. He doesn't know how to set the exposure manually for the bear alone. He spends so long fiddling with buttons that the bear scampers off. The chance is missed.

If the photographer had tried to learn how to shoot into the Sun, or even had a basic understanding of aperture, shutter speed, and film speed, he might have gotten the shot. He never bothered to experiment with the settings. He didn't need to. Full Auto is good enough 90% of the time, and the special modes cover another 5%. Learning what lumens are is too hard and not worth it.

This is why having low-level knowledge, knowing why something works, is important. When confronted with a hard situation, underlying knowledge and the ability to improvise are why craftsmen will succeed and journeymen will fail.

Becoming a Craftsman is Discouraged

In software, hardware and code libraries are cheap; people are expensive.

In an effort to produce as much as possible as cheaply as possible, companies routinely hire weak candidates. This is somewhat understandable; recent graduates have to start somewhere. However, the culture of teaching and growth that used to accompany those hires is now discouraged. After all, the company has already announced that the new product will ship in three months, and no one has started working on it yet! Hurry up and hack it together! There's no time to learn how to make it good!

Of course, phrases like that don't go over well, so we are instead "encouraged to hit the ground running."

The engineers work long, exhausting hours, but they manage to ship on time. Because they succeeded, management assumes the team can deliver the next project in the same amount of time or less. Eventually, the heavily relied-upon craftsmen burn out, and the journeymen and apprentices never get a chance to learn and improve.

In the short term, the company looks prosperous, and other companies try to model themselves after it. In the long term, these companies will fail, and the field as a whole will suffer.

Why Should Consumers Care?

As a consumer, you don't care. I understand that. I wouldn't either, except that this directly affects you.

The best engineers hate technology. I've always had a hard time explaining why, but I think Jacques Mattheij put it best in "All the Technology but None of the Love" (emphasis mine).

The 'hacker' moniker (long a badge of honor, now a derogatory term) applied to quite a few of those that were working to make computers do useful stuff. The systems were so limited (typically: 16K RAM, graphics 256x192, monochrome, or maybe a bit better than that and a couple of colours (16 if you were lucky) a while later) that you had to get really good at programming if you wanted to achieve anything worth displaying to others. And so we got good, or changed careers. The barrier to entry was so high that those that made it across really knew their stuff.

Of course that's not what you want, you want all this to be as accessible as possible but real creativity starts when resources are limited. Being able to work your way around the limitations and being able to make stuff do what it wasn't intended for in the first place (digital re-purposing) is what hacking was all about to me. I simply loved the challenges, the patient mirror that was the screen that would show me mercilessly what I'd done wrong over and over again until I finally learned how to express myself fluently and (relatively) faultlessly.

[...]

I think I have a handle on why this common thread exists between all those [writings by the "old guys"], in a word, disappointment.

What you could do with the hardware at your disposal is absolutely incredible compared to what we actually are doing with it.

It's amazing how many software jobs exist solely to badly reimplement features that have worked for decades. Much of the reason is the new software delivery paradigm: ship it as soon as it barely works and make the user download updates.

Because everything must be coded quickly, things can't be thought out and tested as thoroughly as they used to be. A slight flaw in the design usually isn't apparent until the code is tested thoroughly. At that point, fixing it may require a major (and expensive) rewrite that has to be deployed to users without breaking anything. Wedge Martin explains why this matters:

I can't subscribe to the flawed philosophy that a developer shouldn't have to know how an application is talking to his database, or the fine details of what goes on in the underlying system or storage cluster. Those people are like ticking time bombs for some company to hire to build out their platform. They'll get your prototype out the door at light speed, but put any traffic on it and prepare yourself for a bill the size of a Pirates of the Caribbean movie.

The problem is that the libraries we use abstract away the need to learn anything. Here's a real-world example:

A "socket" is an endpoint of a network connection. At work, I wrote a library that takes the data to be sent and sends it with a single function call. Anyone using the library no longer needed to worry about transmit code or the corner cases we'd discovered over time.
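For readers who have never written one, here is a minimal sketch of what such a one-call send function might look like, assuming POSIX sockets. The name send_all is hypothetical, and this is not the code from my library; it only illustrates the kind of corner cases that layer hides. In particular, send() may accept only part of the buffer, or be interrupted by a signal, so the caller has to loop until every byte is written.

```c
#include <errno.h>
#include <stddef.h>
#include <sys/types.h>
#include <sys/socket.h>

/* Hypothetical helper: keep calling send() until every byte is written.
 * Returns 0 on success, -1 on error (errno is left as set by send()). */
int send_all(int sock, const void *data, size_t len)
{
    const char *p = data;
    int flags = 0;
#ifdef MSG_NOSIGNAL
    /* Where the platform supports it, report a closed peer as EPIPE
     * instead of killing the process with SIGPIPE. */
    flags |= MSG_NOSIGNAL;
#endif

    while (len > 0) {
        ssize_t n = send(sock, p, len, flags);
        if (n < 0) {
            if (errno == EINTR)
                continue;   /* interrupted by a signal: retry the same bytes */
            return -1;      /* real error: let the caller inspect errno */
        }
        /* send() may accept fewer bytes than requested; skip past them. */
        p += n;
        len -= (size_t)n;
    }
    return 0;
}
```

A caller just writes send_all(sock, buf, len) and checks for -1. Keeping the retry logic in one low-level function means a later feature, such as a timeout, can be added in one place instead of being bolted on through extra wrappers.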

Then, someone else needed a more advanced socket feature. So, they added it to my code and made a second function that reused some of what I wrote. This is still good.

Then, someone else wanted a different way of calling these commands, so they wrapped them in another layer of code.

Finally, another person needed a different advanced socket feature, but only understood the wrapper code. So, they added another wrapper with weird hacks to get the socket to do what they wanted.

Over time, everything became a slow, unmaintainable mess. If all these extra features had been added at a lower layer of code, it would have been OK, but most of the coders who used the wrapper had never even heard the word "socket" before. They were, in essence, re-adding features on top of an interface that already provided them.

This is not an uncommon story. It's a form of code bloat, and it's one reason you need a new computer or phone every few years.

Code Bloat

Compilers can make code run faster, but they won't fix your algorithms. All those software layers have to be executed when the code runs. More code means more processing. More processing means more CPU cycles, which means the device takes more time and power to do exactly the same thing. That's one reason the computer that ran lightning fast when you first bought it seems so much slower after a few years of updates.

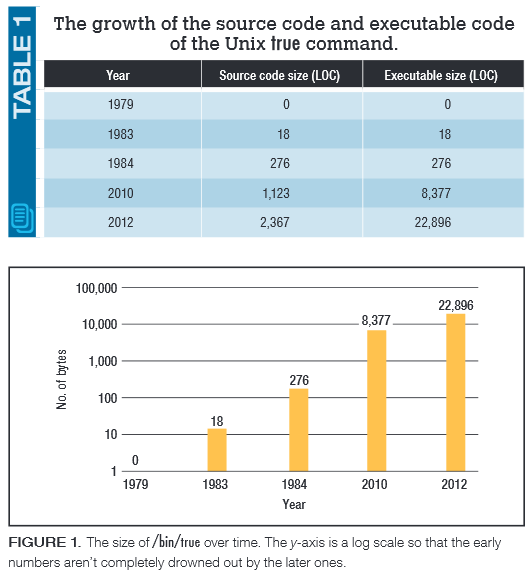

How bad has this gotten? Even at the lowest levels, code is bloating. Take the story of /bin/true that Gerard J. Holzmann tells in "Code Inflation". The program does nothing except report success, so in C its entire job fits on one line:

int main(void) { return 0; }

That's it.

How large is this code now?

That's a lot of code just to return a guaranteed zero. The graph's logarithmic scale compresses the growth; without it, the sheer size of the increase would be hard to grasp. The 2012 executable is 127,200% the size of the 1983 version, roughly 1,272 times larger.

Citing /bin/true as an example of bloat should be a hypothetical reductio ad absurdum in a debate class, not a real example of how big software has become.

The Inspiration for This Article

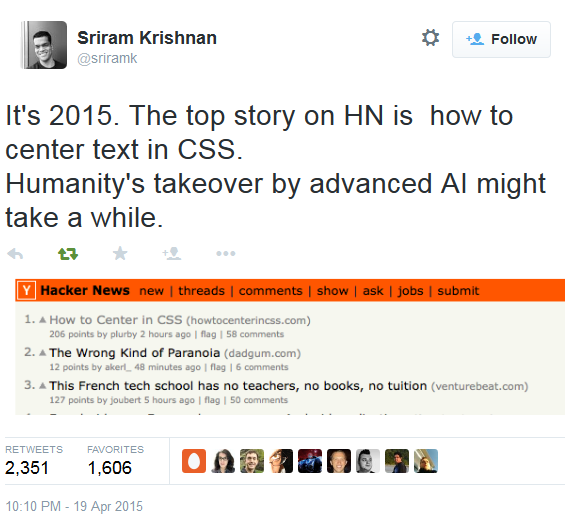

All this rambling has been leading up to this. Things have become so bad that this was the top headline on Hacker News: how to center text on a web page.

Web development has become so bloated and abstracted that many web developers don't know how to center something, to the point that someone dedicated an entire website to text-align: center. Some people use jQuery to do this, so your browser has to download an entire JavaScript library and use a ton of processing cycles rather than downloading the 18 bytes in the previous sentence.

So, next time your computer seems to take forever to load a web page, this is part of the reason why.

"Code Inflation" by Gerard J. Holzmann

"All the Technology but None of the Love" by Jacques Mattheij

"what bums me out about the tech industry (today)" by Wedge Martin